GPT-4V: Expanding Language Models with Image Inputs

GPT-4V, or GPT-4 with vision, is a cutting-edge language model developed by OpenAI. It incorporates image inputs into its language processing capabilities, enabling users to analyze and interact with images.

Introduction

The ability to accept image inputs opens up new possibilities for artificial intelligence research and development.

By combining text and vision, multimodal language models like GPT-4V can offer novel interfaces and capabilities, allowing them to solve new tasks and provide new experiences for users.

Image inputs make GPT-4V relevant to domains such as medicine and scientific analysis, where combining text and vision could support analysis, decision-making, and problem-solving. As the later sections explain, however, the model's performance in some of these domains is not yet reliable.

Training Process of GPT-4V

The training process of GPT-4V is similar to that of GPT-4. It begins with pre-training: the model learns to predict the next word in a document, using a large dataset of text and image data from the Internet and licensed sources. This objective gives the model a foundation in the context and structure of language, which in turn allows it to generate coherent and contextually appropriate responses.
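The next-word objective described above can be illustrated with a toy calculation. The sketch below is not OpenAI's training code; it only shows the standard cross-entropy loss that next-token prediction is usually trained with. The function names, the three-token vocabulary, and the example scores are all hypothetical.

```python
import math

def softmax(logits):
    """Convert raw scores into a probability distribution."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def next_token_loss(logits_per_position, target_ids):
    """Average negative log-likelihood of the correct next token."""
    losses = []
    for logits, target in zip(logits_per_position, target_ids):
        probs = softmax(logits)
        losses.append(-math.log(probs[target]))
    return sum(losses) / len(losses)

# Hypothetical toy setup: a vocabulary of 3 tokens and 2 positions.
targets = [0, 1]
uniform = [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]    # model has no preference
confident = [[5.0, 0.0, 0.0], [0.0, 5.0, 0.0]]  # model favors the right token

loss_uniform = next_token_loss(uniform, targets)      # equals ln(3)
loss_confident = next_token_loss(confident, targets)  # lower than loss_uniform
```

Pre-training lowers this loss across a very large corpus, which is why a model that assigns high probability to the correct next word scores better than one that guesses uniformly.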

After pre-training, GPT-4V is fine-tuned with additional data using reinforcement learning from human feedback (RLHF), so that it produces outputs that human trainers prefer. This step improves the model's performance and aligns it with human preferences.
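The source does not describe how the human feedback is turned into a training signal. One common formulation, assumed here purely for illustration, trains a reward model on pairs of responses so that the response humans preferred receives the higher score. The function name and the reward values below are hypothetical.

```python
import math

def preference_loss(reward_chosen, reward_rejected):
    """Pairwise loss: -log(sigmoid(reward_chosen - reward_rejected))."""
    diff = reward_chosen - reward_rejected
    # -log(sigmoid(diff)) == log(1 + exp(-diff)), computed stably for both signs
    if diff >= 0:
        return math.log1p(math.exp(-diff))
    return -diff + math.log1p(math.exp(diff))

# Hypothetical reward scores for two responses to the same prompt.
agrees = preference_loss(2.0, 0.5)     # reward model ranks the preferred response higher
disagrees = preference_loss(0.5, 2.0)  # reward model ranks it lower
undecided = preference_loss(1.0, 1.0)  # equal scores give ln(2)
```

The loss is small when the reward model agrees with the human ranking and large when it disagrees. In this assumed setup, the fine-tuned model is then optimized to produce responses that the reward model scores highly.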

To enable GPT-4V's visual capabilities, the training process incorporates a combination of text and image data from diverse sources. This integration allows the model to analyze and interpret image inputs provided by users, expanding its understanding and capabilities beyond language.

Safety Considerations for GPT-4V

OpenAI has conducted a thorough analysis of the safety properties of GPT-4V. This evaluation aims to identify and mitigate potential risks associated with image inputs, particularly concerning people and their representation.

The safety work for GPT-4V builds upon the foundation established during the development of GPT-4. Lessons learned and safety measures implemented for GPT-4 have been further refined and expanded to address the unique challenges posed by image inputs.

OpenAI has invested significant effort in evaluating, preparing for, and mitigating risks related to image inputs in GPT-4V. This includes assessing potential harms that may arise from images of people, such as biased outputs and representational or allocational harms. These measures aim to ensure responsible and ethical use of the model.

Through red team testing, OpenAI has identified certain vulnerabilities and limitations in GPT-4V's visual capabilities. These findings help inform ongoing improvements and reinforce the need for rigorous safety evaluations to address potential risks and ensure reliable performance.

Limitations and Unreliable Performance

The current version of GPT-4V performs unreliably on medical tasks, particularly in interpreting radiographic images. Misdiagnosing a condition or incorrectly determining laterality (which side of the body is affected) can have severe consequences. Because of these risks and the model's imperfect performance, GPT-4V is not considered suitable for any medical function or as a substitute for professional medical advice, diagnosis, treatment, or judgment.

These limitations highlight the risk of relying on the model for critical healthcare decisions. Inaccurate diagnoses or misinterpretations can cause harm, so medical professionals must remain involved in any such scenario. The model's current capabilities are not sufficient for accurate and reliable medical decision-making.

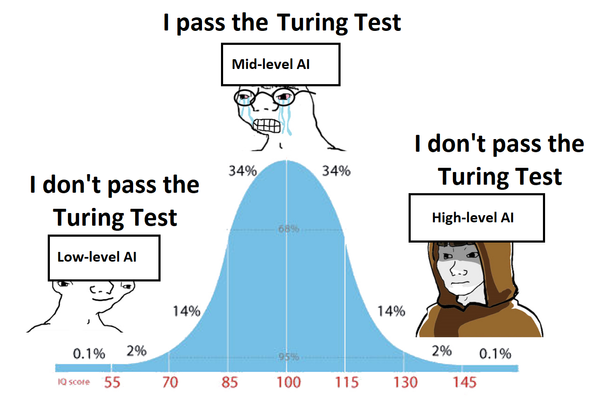

Stereotyping and Ungrounded Inferences

Using GPT-4V for certain tasks can generate unwanted or harmful assumptions that are not grounded in the provided information. These ungrounded inferences can lead to biased outputs or reinforce stereotypes about individuals or places.

In early versions of GPT-4V, red team testing frequently surfaced stereotypes and ungrounded inferences when the model was prompted to make decisions or provide explanations. This highlights the challenges in ensuring unbiased and contextually appropriate responses from multimodal language models.

The presence of stereotypes and ungrounded inferences in GPT-4V's outputs underscores the complexity of decision-making and explanation generation in multimodal models. Addressing these challenges requires ongoing research and development to improve the model's understanding and mitigate potential biases.

Conclusion

GPT-4V represents a significant advancement in language models by incorporating image inputs. It offers users the ability to analyze and interact with images, opening up new possibilities and experiences. However, it also has limitations and challenges, particularly in handling medical applications and in avoiding biases and stereotypes.

The deployment of GPT-4V highlights the importance of continuous safety evaluations and improvements. OpenAI's commitment to assessing and mitigating risks associated with image inputs demonstrates its dedication to responsible and ethical AI development.

GPT-4V's capabilities and limitations pave the way for future advancements in multimodal language models. By addressing safety concerns, refining performance, and enhancing understanding, these models have the potential to revolutionize various industries and provide new opportunities for human-machine interactions.

Note: This article is based on the GPT-4V System Card released by OpenAI in September 2023.