Meeting Summary using GPT3 and Streamlit

Today we are building a Python web app that transcribes a recorded meeting with Whisper, OpenAI's automatic speech recognition (ASR) model, and then uses an OpenAI GPT model to summarize the transcript. We start with a plain script that does both steps:

import openai
import whisper

# Set up your OpenAI API credentials
openai.api_key = 'YOUR_OPENAI_API_KEY'

# The open-source whisper package runs locally, so it needs no API key

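def transcribe_audio(audio_path):
    # Load a pretrained Whisper model ('base' is small and fast; larger models are more accurate)
    model = whisper.load_model('base')
    # Perform automatic speech recognition; the result dict holds the transcript under 'text'
    result = model.transcribe(audio_path)
    return result['text']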

def generate_summary(transcript):
    # Set the prompt for the GPT model
    prompt = f"Summarize the meeting: {transcript}"
    # Generate the summary with OpenAI's completions endpoint (openai>=1.0).
    # text-davinci-003 has been retired; gpt-3.5-turbo-instruct is its replacement.
    response = openai.completions.create(
        model='gpt-3.5-turbo-instruct',
        prompt=prompt,
        max_tokens=100,
        n=1,
        stop=None,
        temperature=0.5,
    )
    summary = response.choices[0].text.strip()
    return summary

# Specify the path to the audio file of the meeting
audio_path = 'path/to/meeting_audio.wav'

# Transcribe the audio
transcript = transcribe_audio(audio_path)

# Generate the summary
summary = generate_summary(transcript)

# Print the generated summary
print("Meeting Summary:")
print(summary)

Replace 'YOUR_OPENAI_API_KEY' with your actual OpenAI API key. The open-source whisper package runs locally and does not need a key of its own. Before running the script, install the required packages (openai and openai-whisper on PyPI) and make sure the ffmpeg command-line tool is available, since Whisper uses it to decode audio.
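Hardcoding the key in the script makes it easy to leak through version control. Here is a minimal sketch of one alternative, assuming the key is stored in an environment variable named OPENAI_API_KEY. The helper name load_api_key is hypothetical and not part of the openai library:

```python
import os


def load_api_key(env_var="OPENAI_API_KEY"):
    """Return the API key stored in the given environment variable.

    Hypothetical helper for illustration; raises if the variable is unset or empty.
    """
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(
            f"Set the {env_var} environment variable before running the script"
        )
    return key
```

The script could then assign openai.api_key = load_api_key() instead of embedding the literal key.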

Here is an example of a Streamlit web app that incorporates the same code:

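import os
import tempfile

import streamlit as st
import openai
import whisper

# Set up your OpenAI API credentials
openai.api_key = 'YOUR_OPENAI_API_KEY'

def transcribe_audio(uploaded_file):
    # Whisper reads audio from a file path, so save the upload to a temporary file first
    with tempfile.NamedTemporaryFile(suffix='.wav', delete=False) as tmp:
        tmp.write(uploaded_file.getvalue())
    try:
        # Load a pretrained Whisper model and perform automatic speech recognition
        model = whisper.load_model('base')
        result = model.transcribe(tmp.name)
    finally:
        # Clean up the temporary file whether or not transcription succeeded
        os.remove(tmp.name)
    return result['text']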

def generate_summary(transcript):
    # Set the prompt for the GPT model
    prompt = f"Summarize the meeting: {transcript}"
    # Generate the summary with OpenAI's completions endpoint (openai>=1.0).
    # text-davinci-003 has been retired; gpt-3.5-turbo-instruct is its replacement.
    response = openai.completions.create(
        model='gpt-3.5-turbo-instruct',
        prompt=prompt,
        max_tokens=100,
        n=1,
        stop=None,
        temperature=0.5,
    )
    summary = response.choices[0].text.strip()
    return summary

def main():
    st.title("Meeting Summary Generator")

    # Let the user upload the meeting recording (an in-memory file object, not a path)
    uploaded_file = st.file_uploader("Upload audio file", type=['wav'])

    if uploaded_file is not None:
        try:
            # Transcribe the audio
            transcript = transcribe_audio(uploaded_file)
            # Generate the summary
            summary = generate_summary(transcript)
            # Display the generated summary
            st.subheader("Meeting Summary:")
            st.write(summary)
        except Exception as e:
            st.error(f"Error: {e}")

if __name__ == '__main__':
    main()

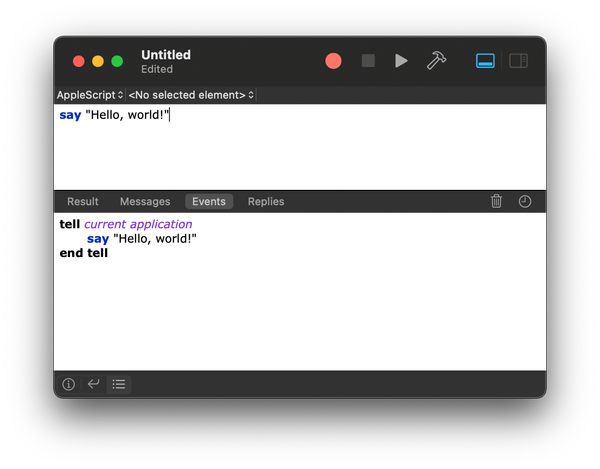

To run this Streamlit web app, save it to a file (e.g., app.py) and execute the following command:

streamlit run app.py
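
One practical limit to keep in mind: the prompt in generate_summary embeds the whole transcript, so a long meeting can exceed the model's context window. Below is a minimal sketch of one workaround, assuming a simple word-count budget rather than true token counting. The names split_transcript and max_words are hypothetical and introduced here only for illustration:

```python
def split_transcript(transcript, max_words=1500):
    """Split a transcript into chunks of at most max_words words each.

    Word count is only a rough proxy for tokens; max_words is an assumed budget.
    """
    if max_words <= 0:
        raise ValueError("max_words must be positive")
    words = transcript.split()
    # Step through the word list in strides of max_words and rejoin each slice
    return [
        " ".join(words[i:i + max_words])
        for i in range(0, len(words), max_words)
    ]
```

Each chunk could be passed to generate_summary separately, with the partial summaries joined and summarized once more to produce the final result.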