Exploring Natural Language Processing in Python: Techniques and Applications

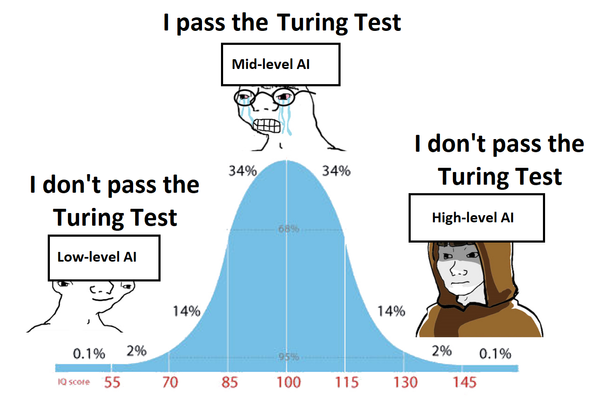


Natural Language Processing (NLP) is a subfield of Artificial Intelligence (AI) that focuses on the interaction between computers and human language. It involves the development of algorithms and models to process, understand and generate human language. In this blog post, we will explore various techniques and applications of NLP using Python.

Introduction to Natural Language Processing

Natural Language Processing (NLP) is a field of study that combines linguistics, computer science and AI to enable computers to understand, interpret and generate human language. It involves tasks such as text preprocessing, tokenisation, part-of-speech tagging, named entity recognition, sentiment analysis, topic modeling, text classification and machine translation.

NLP has a wide range of applications, including chatbots, virtual assistants, sentiment analysis in social media, machine translation, information retrieval and much more.

Text Preprocessing

Text preprocessing is an essential step in NLP that involves cleaning and transforming raw text data into a format suitable for further analysis. It includes tasks such as removing special characters, converting text to lowercase, removing stop words and performing stemming or lemmatisation.

```python
# Example code for text preprocessing
import re

import nltk
from nltk.corpus import stopwords
from nltk.stem import PorterStemmer

# Download the required NLTK data once, rather than on every call
# ('punkt_tab' is the tokenizer resource used by newer NLTK releases)
nltk.download('stopwords', quiet=True)
nltk.download('punkt', quiet=True)
nltk.download('punkt_tab', quiet=True)

STOP_WORDS = set(stopwords.words('english'))
STEMMER = PorterStemmer()

def preprocess_text(text):
    # Remove special characters and digits
    text = re.sub(r'[^a-zA-Z\s]', '', text)
    # Convert text to lowercase
    text = text.lower()
    # Tokenise, then remove stop words
    tokens = nltk.word_tokenize(text)
    tokens = [token for token in tokens if token not in STOP_WORDS]
    # Perform stemming
    return [STEMMER.stem(token) for token in tokens]

# Example usage
text = "Natural Language Processing is a fascinating field!"
preprocessed_text = preprocess_text(text)
print(preprocessed_text)
```
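The preprocessing code uses stemming only. To make the difference from lemmatisation concrete, here is a library-free sketch: a stemmer strips suffixes by rule and may produce non-words, while a lemmatiser maps a word to its dictionary form. Both the suffix rules and the LEMMAS table are hypothetical toy stand-ins, not the Porter algorithm or any NLTK lemmatiser.

```python
# Suffixes to strip, longest first (a toy rule set, NOT the Porter algorithm)
SUFFIXES = ["ing", "ly", "ed", "es", "s"]

def toy_stem(word, min_stem=3):
    """Strip the first matching suffix if at least min_stem characters remain."""
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= min_stem:
            return word[: -len(suffix)]
    return word

# A tiny hand-written lemma dictionary (hypothetical; real lemmatisers use a
# lexical database and usually the word's part of speech)
LEMMAS = {"studies": "study", "was": "be", "better": "good", "mice": "mouse"}

def toy_lemmatise(word):
    """Return the dictionary form if it is known, otherwise the word unchanged."""
    return LEMMAS.get(word, word)

for word in ["processing", "studies", "was", "mice"]:
    print(word, toy_stem(word), toy_lemmatise(word))
```

Note how the stemmer turns "studies" into the non-word "studi" and leaves "mice" alone, while the lookup recovers "study" and "mouse". Real lemmatisers such as NLTK's WordNetLemmatizer rely on a lexical database rather than a hand-written table.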

Tokenisation

Tokenisation is the process of breaking text down into smaller units called tokens, usually words or sentences. It is a crucial step in NLP because most later processing steps, such as tagging and classification, operate on tokens rather than on raw text. Common tokenisation techniques include whitespace tokenisation, word tokenisation and sentence tokenisation.

```python
# Example code for tokenization
import nltk
from nltk.tokenize import word_tokenize, sent_tokenize

# word_tokenize and sent_tokenize need the Punkt tokenizer models
nltk.download('punkt', quiet=True)
nltk.download('punkt_tab', quiet=True)

# Word tokenization
text = "Natural Language Processing is a fascinating field!"
tokens = word_tokenize(text)
print(tokens)

# Sentence tokenization
text = "Natural Language Processing is a fascinating field! It has many applications."
sentences = sent_tokenize(text)
print(sentences)
```
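Whitespace tokenisation is mentioned but not shown above: it simply splits on spaces, so punctuation stays attached to words. The sketch below contrasts it with a simple regular-expression word tokeniser; the regex is an illustrative assumption and is not how NLTK's word_tokenize works internally.

```python
import re

text = "Natural Language Processing is a fascinating field!"

# Whitespace tokenisation: split on runs of whitespace only,
# so punctuation stays attached to the neighbouring word
whitespace_tokens = text.split()

# A simple regex word tokeniser (illustrative only): runs of word characters
# become tokens, and every other non-space character becomes its own token
regex_tokens = re.findall(r"\w+|[^\w\s]", text)

print(whitespace_tokens)
print(regex_tokens)
```

The only difference here is the last token: "field!" versus "field" and "!", which matters as soon as you count or look up words.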

Part-of-Speech Tagging

Part-of-Speech (POS) tagging is the process of assigning grammatical labels, such as noun, verb or adjective, to the words in a sentence. POS tagging is essential for many NLP tasks, including language understanding, information extraction and text-to-speech synthesis.

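```python
# Example code for part-of-speech tagging
import nltk

# Tokenizer and tagger models ('*_tab' and '*_eng' are the names used by newer NLTK releases)
for resource in ['punkt', 'punkt_tab', 'averaged_perceptron_tagger', 'averaged_perceptron_tagger_eng']:
    nltk.download(resource, quiet=True)

text = "Natural Language Processing is a fascinating field!"
tokens = nltk.word_tokenize(text)
pos_tags = nltk.pos_tag(tokens)
print(pos_tags)
```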
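A tagger learns which label each word tends to receive. A minimal sketch of the simplest such approach, a unigram (most-frequent-tag) tagger, is shown below. The three training sentences are hypothetical hand-tagged data using Penn Treebank tags (DT determiner, NN noun, VBZ third-person singular present verb, JJ adjective). NLTK's pos_tag uses a trained averaged perceptron that also looks at context, so it is considerably more accurate than this baseline.

```python
from collections import Counter, defaultdict

# Hypothetical hand-tagged training sentences (Penn Treebank tags)
training_sentences = [
    [("the", "DT"), ("field", "NN"), ("is", "VBZ"), ("fascinating", "JJ")],
    [("a", "DT"), ("model", "NN"), ("processes", "VBZ"), ("language", "NN")],
    [("the", "DT"), ("language", "NN"), ("is", "VBZ"), ("natural", "JJ")],
]

def train_unigram_tagger(sentences):
    """Map each (lowercased) word to the tag it carries most often in training."""
    tag_counts = defaultdict(Counter)
    for sentence in sentences:
        for word, tag in sentence:
            tag_counts[word.lower()][tag] += 1
    return {word: counts.most_common(1)[0][0] for word, counts in tag_counts.items()}

def unigram_tag(tokens, model, default="NN"):
    """Tag each token by lookup; unseen words fall back to the default tag (noun)."""
    return [(token, model.get(token.lower(), default)) for token in tokens]

model = train_unigram_tagger(training_sentences)
print(unigram_tag(["The", "model", "is", "natural"], model))
print(unigram_tag(["Parsing", "is", "fun"], model))
```

"Parsing" and "fun" are tagged NN only because they never appeared in training, which is exactly the weakness that context-aware taggers address.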

Named Entity Recognition

Named Entity Recognition (NER) is the process of identifying and classifying named entities, such as people, organisations and locations, in text. NER is crucial for various applications, including information extraction, question answering and text summarisation.

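```python
# Example code for named entity recognition
import nltk

# Tokenizer, tagger and chunker models plus the 'words' corpus used by ne_chunk
for resource in ['punkt', 'punkt_tab', 'averaged_perceptron_tagger',
                 'averaged_perceptron_tagger_eng', 'maxent_ne_chunker',
                 'maxent_ne_chunker_tab', 'words']:
    nltk.download(resource, quiet=True)

text = "Apple Inc. is planning to open a new store in New York City."
tokens = nltk.word_tokenize(text)
pos_tags = nltk.pos_tag(tokens)
ner_tags = nltk.ne_chunk(pos_tags)  # an nltk.Tree whose subtrees are the entities
print(ner_tags)
```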
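Entity recognisers often label each token with a BIO tag: B- begins an entity, I- continues it and O marks tokens outside any entity. The sketch below groups such tags back into entity spans. The tags are hand-assigned to the example sentence for illustration; they are not the output of ne_chunk.

```python
def bio_to_entities(tokens, tags):
    """Group BIO-tagged tokens into (entity_text, entity_type) spans."""
    entities = []
    current_tokens, current_type = [], None
    for token, tag in zip(tokens, tags):
        if tag.startswith("B-") or (tag.startswith("I-") and tag[2:] != current_type):
            # A new entity starts (a stray I- tag of a different type also starts one)
            if current_tokens:
                entities.append((" ".join(current_tokens), current_type))
            current_tokens, current_type = [token], tag[2:]
        elif tag.startswith("I-"):
            # Continue the current entity
            current_tokens.append(token)
        else:
            # "O" tag: close any open entity
            if current_tokens:
                entities.append((" ".join(current_tokens), current_type))
            current_tokens, current_type = [], None
    if current_tokens:
        entities.append((" ".join(current_tokens), current_type))
    return entities

# Hand-assigned tags for illustration (not produced by a real model)
tokens = ["Apple", "Inc.", "is", "planning", "to", "open", "a", "new",
          "store", "in", "New", "York", "City", "."]
tags = ["B-ORGANIZATION", "I-ORGANIZATION", "O", "O", "O", "O", "O", "O",
        "O", "O", "B-GPE", "I-GPE", "I-GPE", "O"]
print(bio_to_entities(tokens, tags))
```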

Sentiment Analysis

Sentiment Analysis is the process of determining the sentiment or emotional tone of a piece of text. It involves classifying text as positive, negative, or neutral. Sentiment analysis has applications in social media monitoring, customer feedback analysis and brand reputation management.

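```python
# Example code for sentiment analysis
import nltk
from nltk.sentiment import SentimentIntensityAnalyzer

# The VADER analyser needs its sentiment lexicon
nltk.download('vader_lexicon', quiet=True)

text = "I love this product! It exceeded my expectations."
sid = SentimentIntensityAnalyzer()
sentiment_scores = sid.polarity_scores(text)  # dict with 'neg', 'neu', 'pos' and 'compound'
print(sentiment_scores)
```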
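polarity_scores returns negative, neutral and positive proportions plus a normalised compound score between -1 and 1. To turn this into the positive/negative/neutral classification described above, a common convention from the VADER documentation is to treat a compound score of at least 0.05 as positive and at most -0.05 as negative. The score dictionaries below are made-up inputs in that shape, not real analyser output.

```python
def label_sentiment(scores, threshold=0.05):
    """Map a VADER-style score dict to 'positive', 'negative' or 'neutral'."""
    compound = scores["compound"]  # normalised overall score in [-1, 1]
    if compound >= threshold:
        return "positive"
    if compound <= -threshold:
        return "negative"
    return "neutral"

# Hypothetical score dictionaries in the shape returned by polarity_scores()
examples = [
    {"neg": 0.0, "neu": 0.35, "pos": 0.65, "compound": 0.85},
    {"neg": 0.5, "neu": 0.5, "pos": 0.0, "compound": -0.6},
    {"neg": 0.0, "neu": 1.0, "pos": 0.0, "compound": 0.0},
]
for scores in examples:
    print(scores["compound"], label_sentiment(scores))
```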

Topic Modeling

Topic Modeling is a technique for discovering hidden topics or themes in a collection of documents. It helps organise and summarise large amounts of text data. One popular algorithm for topic modeling is Latent Dirichlet Allocation (LDA), which represents each document as a mixture of topics and each topic as a probability distribution over words.

```python
# Example code for topic modeling
from gensim import corpora
from gensim.models import LdaModel

documents = ["Natural Language Processing is a fascinating field!",
             "Topic modeling helps in organizing and summarizing text data.",
             "LDA is a popular algorithm for topic modeling."]

# Preprocess documents (preprocess_text is defined in the Text Preprocessing section)
preprocessed_documents = [preprocess_text(doc) for doc in documents]

# Create dictionary and bag-of-words corpus
dictionary = corpora.Dictionary(preprocessed_documents)
corpus = [dictionary.doc2bow(doc) for doc in preprocessed_documents]

# Train LDA model; a fixed random_state makes the topics reproducible
lda_model = LdaModel(corpus=corpus, id2word=dictionary, num_topics=2,
                     passes=10, random_state=42)

# Print topics
for topic in lda_model.print_topics():
    print(topic)
```
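gensim hides how LDA actually infers topics. The sketch below fits LDA with collapsed Gibbs sampling, one standard inference method: every token is assigned a topic, and each assignment is repeatedly resampled in proportion to how common that topic is in the document and how common the word is in that topic. This is not gensim's algorithm (LdaModel uses online variational Bayes); the tiny corpus is hypothetical and the alpha and beta hyperparameters are arbitrary choices.

```python
import random

def lda_gibbs(docs, num_topics, alpha=0.1, beta=0.01, iterations=200, seed=0):
    """Fit LDA to tokenised documents with collapsed Gibbs sampling.

    Returns the sorted vocabulary and phi, where phi[k][w] estimates P(word w | topic k).
    """
    rng = random.Random(seed)
    vocab = sorted({word for doc in docs for word in doc})
    word_id = {word: i for i, word in enumerate(vocab)}
    V = len(vocab)

    # Count tables: topics per document, words per topic, tokens per topic
    n_dk = [[0] * num_topics for _ in docs]
    n_kw = [[0] * V for _ in range(num_topics)]
    n_k = [0] * num_topics

    # Start from a random topic assignment for every token
    assignments = []
    for d, doc in enumerate(docs):
        doc_topics = []
        for word in doc:
            z = rng.randrange(num_topics)
            doc_topics.append(z)
            n_dk[d][z] += 1
            n_kw[z][word_id[word]] += 1
            n_k[z] += 1
        assignments.append(doc_topics)

    for _ in range(iterations):
        for d, doc in enumerate(docs):
            for i, word in enumerate(doc):
                w, z = word_id[word], assignments[d][i]
                # Remove this token's current assignment from the counts
                n_dk[d][z] -= 1
                n_kw[z][w] -= 1
                n_k[z] -= 1
                # Unnormalised P(topic = k | all other assignments)
                weights = [(n_dk[d][k] + alpha) * (n_kw[k][w] + beta) / (n_k[k] + V * beta)
                           for k in range(num_topics)]
                z = rng.choices(range(num_topics), weights=weights)[0]
                # Add the token back under its newly sampled topic
                assignments[d][i] = z
                n_dk[d][z] += 1
                n_kw[z][w] += 1
                n_k[z] += 1

    # Smoothed topic-word distributions; each row sums to 1
    phi = [[(n_kw[k][w] + beta) / (n_k[k] + V * beta) for w in range(V)]
           for k in range(num_topics)]
    return vocab, phi

# Hypothetical, already-tokenised corpus with two obvious themes
docs = [["cat", "dog", "pet", "dog"], ["pet", "cat", "vet"],
        ["stock", "market", "trade"], ["market", "price", "stock", "trade"]]
vocab, phi = lda_gibbs(docs, num_topics=2)
for k, row in enumerate(phi):
    top_ids = sorted(range(len(vocab)), key=row.__getitem__, reverse=True)[:3]
    print(f"Topic {k}: {[vocab[w] for w in top_ids]}")
```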

Text Classification

Text Classification is the process of assigning predefined categories or labels to text documents. It is widely used in spam filtering, sentiment analysis and document categorisation. One popular algorithm for text classification is Naive Bayes.

```python
# Example code for text classification
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.naive_bayes import MultinomialNB
from sklearn.pipeline import Pipeline

documents = ["Natural Language Processing is a fascinating field!",
             "Text classification is an important NLP task.",
             "Naive Bayes is a popular algorithm for text classification."]
labels = ["NLP", "NLP", "Text Classification"]

# Create pipeline
pipeline = Pipeline([
    ('tfidf', TfidfVectorizer()),
    ('classifier', MultinomialNB())
])

# Train classifier
pipeline.fit(documents, labels)

# Predict category for new document
new_document = "I am interested in Natural Language Processing."
predicted_category = pipeline.predict([new_document])
print(predicted_category)
```
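Naive Bayes picks the label that maximises P(label) multiplied by P(word | label) for every word in the document, assuming the words are independent given the label. A from-scratch sketch with add-one (Laplace) smoothing follows. Unlike the scikit-learn pipeline it works on raw word counts rather than TF-IDF weights, and the spam/ham training data is hypothetical.

```python
import math
import re
from collections import Counter, defaultdict

def simple_tokenize(text):
    """Lowercase the text and keep runs of letters as tokens."""
    return re.findall(r"[a-z]+", text.lower())

class NaiveBayesClassifier:
    """Multinomial Naive Bayes over raw word counts with add-one (Laplace) smoothing."""

    def fit(self, documents, labels):
        self.class_counts = Counter(labels)       # number of documents per label
        self.word_counts = defaultdict(Counter)   # word frequencies per label
        for doc, label in zip(documents, labels):
            self.word_counts[label].update(simple_tokenize(doc))
        self.vocab = {word for counts in self.word_counts.values() for word in counts}
        return self

    def predict(self, document):
        total_docs = sum(self.class_counts.values())
        best_label, best_score = None, -math.inf
        for label, doc_count in self.class_counts.items():
            # Log space avoids underflow: log P(label) + sum of log P(word | label)
            score = math.log(doc_count / total_docs)
            total_words = sum(self.word_counts[label].values())
            for word in simple_tokenize(document):
                if word not in self.vocab:
                    continue  # words never seen in training are ignored
                count = self.word_counts[label][word]
                score += math.log((count + 1) / (total_words + len(self.vocab)))
            if score > best_score:
                best_label, best_score = label, score
        return best_label

# Hypothetical training data
train_docs = ["win a free prize now", "free money click now",
              "meeting at noon tomorrow", "project meeting notes attached"]
train_labels = ["spam", "spam", "ham", "ham"]

clf = NaiveBayesClassifier().fit(train_docs, train_labels)
print(clf.predict("free prize money"))
print(clf.predict("meeting notes tomorrow"))
```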

Machine Translation

Machine Translation is the task of automatically translating text from one language to another. It involves building models that can understand the structure and meaning of sentences in different languages. One popular approach for machine translation is Neural Machine Translation (NMT).

```python
# Example code for machine translation
# Requires the transformers, sentencepiece and torch packages
from transformers import MarianMTModel, MarianTokenizer

# Pretrained MarianMT model for English-to-German translation
model_name = 'Helsinki-NLP/opus-mt-en-de'
tokenizer = MarianTokenizer.from_pretrained(model_name)
model = MarianMTModel.from_pretrained(model_name)

text = "Hello, how are you?"
input_ids = tokenizer.encode(text, return_tensors='pt')
translated_ids = model.generate(input_ids)  # token ids of the translation
decoded_text = tokenizer.decode(translated_ids[0], skip_special_tokens=True)
print(decoded_text)
```
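model.generate produces the translation one token at a time, each step conditioned on the source sentence and the tokens generated so far. The sketch below shows the simplest decoding strategy, greedy search, with a hand-written toy probability table standing in for a real network. Depending on the model's generation settings, generate may use beam search instead, which keeps several candidate translations alive at each step.

```python
# Hypothetical toy "model": next-token probabilities keyed by the previous token only.
# A real NMT decoder conditions on the whole source sentence and all previous outputs.
TOY_NEXT_TOKEN = {
    "<s>": {"Hallo": 0.9, "Hi": 0.1},
    "Hallo": {",": 0.8, "</s>": 0.2},
    ",": {"wie": 0.7, "was": 0.3},
    "wie": {"geht": 0.9, "ist": 0.1},
    "geht": {"es": 0.85, "</s>": 0.15},
    "es": {"dir": 0.8, "Ihnen": 0.2},
    "dir": {"?": 0.9, "</s>": 0.1},
    "?": {"</s>": 1.0},
}

def toy_next_token_probs(prefix):
    """Return next-token probabilities given the tokens generated so far."""
    return TOY_NEXT_TOKEN.get(prefix[-1], {"</s>": 1.0})

def greedy_decode(step_fn, bos="<s>", eos="</s>", max_len=20):
    """Generate one token at a time, always taking the most probable next token."""
    output = [bos]
    for _ in range(max_len):
        probs = step_fn(output)
        token = max(probs, key=probs.get)
        if token == eos:
            break
        output.append(token)
    return output[1:]  # drop the start token

tokens = greedy_decode(toy_next_token_probs)
print(" ".join(tokens))
```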

Conclusion

Natural Language Processing is a fascinating field that enables computers to understand, interpret and generate human language. In this blog post, we explored various techniques and applications of NLP using Python. We covered text preprocessing, tokenisation, part-of-speech tagging, named entity recognition, sentiment analysis, topic modeling, text classification and machine translation. With the powerful tools and libraries available in Python, NLP has become more accessible and easier to implement in real-world applications.