Ethical Implications of Artificial Intelligence

Introduction

Artificial intelligence (AI) has made significant advancements in recent years, revolutionising various industries and transforming the way we live and work. However, with these advancements come ethical implications that need to be carefully considered. In this article, we review four of the main ethical concerns raised by AI (bias and discrimination, privacy and security, accountability and transparency, and job displacement and economic inequality) and discuss the associated challenges and potential solutions.

Ethical Concerns

Bias and Discrimination

One of the major ethical concerns surrounding AI is the potential for bias and discrimination. AI systems are often trained on large datasets that encode historical or sampling biases, and these biases can carry through into the system's outputs. For example, if an AI system is trained on historical data that reflects societal biases, it may perpetuate those biases in its decisions. This can have serious consequences in high-stakes areas such as hiring, lending and criminal justice.
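To make the mechanism concrete, the following Python sketch uses a deliberately simplified, hypothetical "model" (`train_majority_model`) that predicts the most common historical outcome for each combination of applicant attributes. The records in `HISTORY` are invented for illustration. Real systems are far more complex, but the sketch shows how a model that faithfully fits biased historical data reproduces that bias.

```python
from collections import Counter, defaultdict

# Hypothetical historical hiring records: (qualified, group, hired).
# In this invented data, qualified applicants from group "B" were hired less often.
HISTORY = [
    (True, "A", True), (True, "A", True), (True, "A", True), (True, "A", False),
    (True, "B", True), (True, "B", False), (True, "B", False), (True, "B", False),
    (False, "A", False), (False, "B", False),
]


def train_majority_model(records):
    """'Learn' the most common past outcome for each (qualified, group) pair."""
    outcomes = defaultdict(Counter)
    for qualified, group, hired in records:
        outcomes[(qualified, group)][hired] += 1
    # most_common(1) returns [(outcome, count)] for the most frequent outcome
    return {key: counts.most_common(1)[0][0] for key, counts in outcomes.items()}


model = train_majority_model(HISTORY)

# Two equally qualified applicants receive different predictions, because the
# model has reproduced the pattern present in its training data.
print(model[(True, "A")], model[(True, "B")])  # True False
```

Nothing in the training step is "wrong" in a statistical sense; the disparity comes entirely from the data, which is why the quality of the training data matters so much.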

To address this concern, it is crucial to ensure that the data used to train AI systems is diverse and representative of the population the system will serve. Data should be collected with care to avoid under- or over-representing particular groups. In addition, ongoing monitoring and evaluation can help identify and correct biases as they arise, for example through regular audits that compare a system's outcomes across demographic groups to check for fairness and non-discrimination.
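Two simple audit checks are sketched below in Python, under stated assumptions: each record carries a group label, decisions are binary, and reference population shares are known. The helper names (`representation_gap`, `selection_rates`, `disparate_impact_ratio`) are hypothetical. The 0.8 threshold is the "four-fifths" rule of thumb from US employment-selection guidance, used here only as an illustrative trigger for closer review, not as a definitive test of fairness.

```python
from collections import defaultdict


def representation_gap(train_groups, population_share):
    """Each group's share of the training data minus its share of the
    reference population (positive = over-represented)."""
    counts = defaultdict(int)
    for g in train_groups:
        counts[g] += 1
    total = len(train_groups)
    return {g: counts[g] / total - share for g, share in population_share.items()}


def selection_rates(groups, decisions):
    """Fraction of positive decisions (e.g. 'shortlisted') for each group."""
    positives = defaultdict(int)
    totals = defaultdict(int)
    for g, d in zip(groups, decisions):
        totals[g] += 1
        positives[g] += int(d)
    return {g: positives[g] / totals[g] for g in totals}


def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest."""
    return min(rates.values()) / max(rates.values())


# Illustrative usage with invented data
print(representation_gap(["A", "A", "A", "B"], {"A": 0.5, "B": 0.5}))
# {'A': 0.25, 'B': -0.25}

groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
decisions = [1, 1, 1, 0, 1, 0, 0, 0]
rates = selection_rates(groups, decisions)
ratio = disparate_impact_ratio(rates)
if ratio < 0.8:  # illustrative four-fifths threshold
    print(f"Possible adverse impact: ratio {ratio:.2f}")  # ratio 0.33
```

A low ratio does not by itself prove discrimination, and a high one does not rule it out; these checks are a starting point that tells auditors where to look more closely.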

Privacy and Security

AI systems often rely on vast amounts of data to make accurate predictions and decisions, which raises concerns about privacy and security. Personal information collected by AI systems can be misused or accessed without authorisation. AI systems that process sensitive data, such as medical records or financial information, need particularly strong safeguards to protect individuals' privacy.

To mitigate these concerns, organisations must implement robust data protection measures, including encryption, access controls and regular security audits. Privacy-by-design principles should be built into the development of AI systems so that privacy is considered from the outset, for instance by collecting only the data a system actually needs. Transparency and informed consent should also be prioritised so that individuals know how their data is being used and retain control over their personal information.
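As one illustration of privacy by design, the Python sketch below prepares a record for model training by keeping only an allow-list of fields and replacing the direct identifier with a keyed hash (HMAC-SHA256, from Python's standard `hmac` and `hashlib` modules). The field names, `ALLOWED_FIELDS`, and the in-process key are hypothetical assumptions; in practice the key would be held in a key-management system, separate from the data.

```python
import hashlib
import hmac
import secrets

# Hypothetical: a real deployment would load this key from a key-management
# service and store it separately from the data it protects.
PSEUDONYM_KEY = secrets.token_bytes(32)

# Hypothetical allow-list: only the fields the model actually needs.
ALLOWED_FIELDS = {"age_band", "region", "account_tenure_months"}


def pseudonymise(identifier: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Replace a direct identifier with a keyed hash (HMAC-SHA256).

    The same identifier always maps to the same token, so records can still
    be linked, but without the key the token cannot feasibly be traced back
    to the original identifier."""
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def prepare_record(raw: dict) -> dict:
    """Keep only allow-listed fields and swap the customer ID for a token."""
    record = {k: v for k, v in raw.items() if k in ALLOWED_FIELDS}
    record["subject_token"] = pseudonymise(raw["customer_id"])
    return record


# Illustrative usage with an invented record
raw = {"customer_id": "C-10293", "name": "Jane Doe", "age_band": "30-39",
       "region": "North", "account_tenure_months": 18}
clean = prepare_record(raw)
print(sorted(clean))  # ['account_tenure_months', 'age_band', 'region', 'subject_token']
```

Pseudonymisation reduces privacy risk but does not eliminate it; under the GDPR, for instance, pseudonymised data is still treated as personal data, so encryption, access controls and audits remain necessary.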

Accountability and Transparency

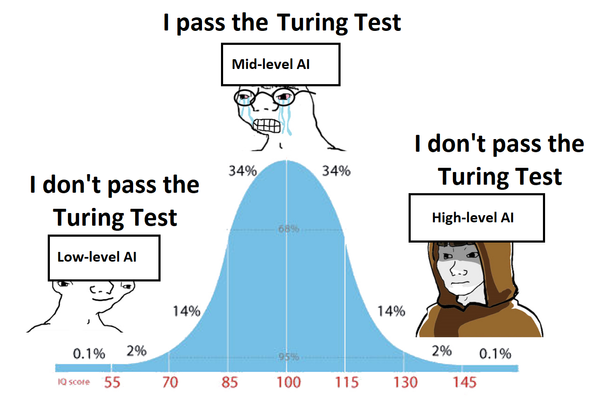

Many AI systems are complex enough that their decision-making processes are difficult to understand. This lack of transparency raises concerns about accountability: if an AI system makes a biased or discriminatory decision, it may be difficult to identify the responsible party or hold them accountable.

To address this, organisations must prioritise transparency in AI systems. This includes providing explanations for AI decisions, being transparent about the training process and making the source code accessible for auditing. Explainable AI (XAI) techniques can be used to make AI systems more transparent and interpretable; they aim to show how a system arrived at a particular decision, supporting better understanding and accountability. Regulatory frameworks can also play a vital role in holding organisations accountable for the ethical implications of their AI systems by establishing guidelines and standards for transparency and accountability.
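For the simplest case, a linear scoring model, an exact explanation is easy to compute: each feature's contribution is its weight times its deviation from a baseline, and these contributions sum to the difference between the applicant's score and the baseline score. The Python sketch below assumes a hypothetical credit-scoring model (`WEIGHTS`, `BIAS`, `MODEL_VERSION` and `THRESHOLD` are invented) and stores the explanation in a decision record, so that each outcome can later be traced to a model version and its inputs. This exact decomposition holds only for linear models; for complex models, XAI techniques such as SHAP or LIME estimate comparable attributions.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical linear credit-scoring model: score = BIAS + sum(w_i * x_i).
WEIGHTS = {"income_k": 0.04, "debt_ratio": -2.5, "late_payments": -0.6}
BIAS = -1.0
MODEL_VERSION = "credit-linear-v1"  # hypothetical identifier
THRESHOLD = 0.0


@dataclass
class DecisionRecord:
    """Audit-trail entry: what was decided, by which model, and why."""
    model_version: str
    inputs: dict
    score: float
    approved: bool
    contributions: dict
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat())


def linear_score(x):
    return BIAS + sum(WEIGHTS[f] * x[f] for f in WEIGHTS)


def explain_and_decide(x, baseline):
    """Score an applicant and attribute the score to each feature.

    For a linear model, w_i * (x_i - baseline_i) is an exact additive
    attribution: the contributions sum to score(x) - score(baseline)."""
    score = linear_score(x)
    contributions = {f: WEIGHTS[f] * (x[f] - baseline[f]) for f in WEIGHTS}
    return DecisionRecord(MODEL_VERSION, dict(x), score, score >= THRESHOLD,
                          contributions)


# Illustrative usage with invented values
baseline = {"income_k": 40, "debt_ratio": 0.3, "late_payments": 1}
applicant = {"income_k": 35, "debt_ratio": 0.6, "late_payments": 3}
record = explain_and_decide(applicant, baseline)

# List features in order of influence on this decision
ranked = sorted(record.contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
for feature, contribution in ranked:
    print(f"{feature}: {contribution:+.2f}")
```

Choosing a meaningful baseline (for example, a typical approved applicant) matters, because each explanation is always relative to that reference point.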

Job Displacement and Economic Inequality

The rapid advancement of AI technology has raised concerns about job displacement and economic inequality. AI systems have the potential to automate various tasks, leading to job losses in certain industries. This can exacerbate existing economic inequalities and create new challenges for individuals who are unable to adapt to the changing job market.

To address this concern, it is crucial to invest in reskilling and upskilling programmes so that individuals have the skills they need to thrive in an AI-driven economy. Education and training initiatives should be developed to help individuals transition into new roles and industries, and governments and organisations can collaborate to provide support and resources for those affected by AI-driven job displacement. Policymakers should also consider social safety nets and policies that promote a more equitable distribution of AI-generated wealth. Proposals include measures such as universal basic income or job guarantees, intended to mitigate the impact of job displacement and support economic stability.

Conclusion

As AI continues to advance, it is essential to carefully consider the ethical implications associated with its use. Bias and discrimination; privacy and security; accountability and transparency; and job displacement and economic inequality are among the key concerns that need to be addressed. By proactively addressing these ethical implications, we can help ensure that AI technology is developed and deployed in a way that benefits society as a whole.